AI and Public Policy

Research

My technical research spans machine learning security, natural language processing, and their applications to defense and policy problems. The work pairs theoretical analysis with practical implementations intended for real-world use.

Key Research Areas:

Cost-Sensitive Classifiers: Developed novel adversarial attacks against cost-sensitive classifiers, where different types of errors carry different costs, and used game theory to analyze attacker-defender interactions. Published at the NeurIPS 2019 Workshop on Safety and Robustness in Decision Making. Available on arXiv.

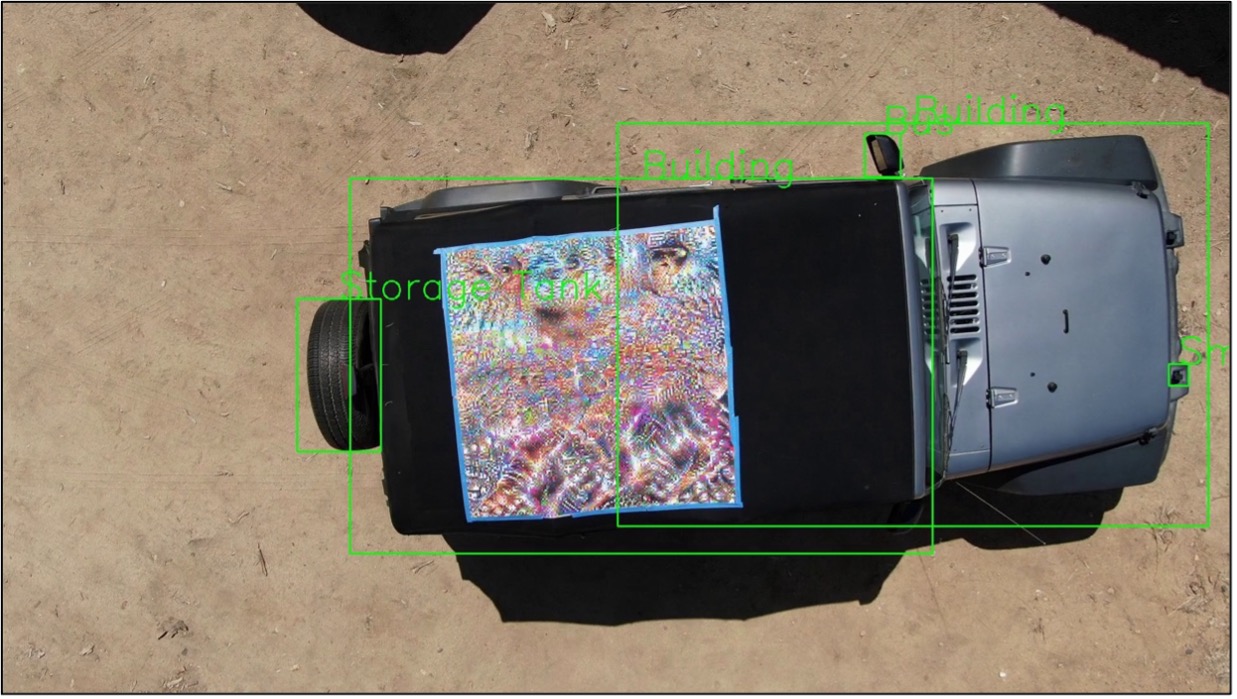
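To make the cost-asymmetry idea concrete, here is a minimal Python sketch. It is not the method from the paper: it is a toy linear classifier whose decision threshold is derived from a hypothetical cost matrix, paired with a closed-form minimal-perturbation evasion attack against it. The class `CostSensitiveLinear`, the function `minimal_evasion`, and the cost values are illustrative assumptions, and the game-theoretic analysis is not reproduced here.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass
class CostSensitiveLinear:
    """Toy logistic scorer p(y=1|x) = sigmoid(w.x + b) with a cost-aware threshold."""
    w: List[float]
    b: float
    cost_fp: float  # hypothetical cost of flagging a benign input
    cost_fn: float  # hypothetical cost of missing a malicious input

    def logit(self, x: List[float]) -> float:
        return sum(wi * xi for wi, xi in zip(self.w, x)) + self.b

    def threshold(self) -> float:
        # Predicting positive has lower expected cost when
        # p * cost_fn > (1 - p) * cost_fp, i.e. p > cost_fp / (cost_fp + cost_fn).
        return self.cost_fp / (self.cost_fp + self.cost_fn)

    def predict(self, x: List[float]) -> int:
        p = 1.0 / (1.0 + math.exp(-self.logit(x)))
        return int(p > self.threshold())


def minimal_evasion(clf: CostSensitiveLinear, x: List[float], margin: float = 1e-3) -> List[float]:
    """Smallest L2 perturbation that moves x just below the decision threshold.

    For a linear logit the optimal direction is -w; we step far enough that the
    logit lands `margin` below logit(threshold)."""
    t = clf.threshold()
    z_target = math.log(t / (1.0 - t)) - margin
    z = clf.logit(x)
    if z <= z_target:
        return list(x)  # already classified negative
    step = (z - z_target) / sum(wi * wi for wi in clf.w)
    return [xi - step * wi for xi, wi in zip(x, clf.w)]


# Example: missing a positive is 9x as costly as a false alarm.
clf = CostSensitiveLinear(w=[2.0, -1.0], b=0.0, cost_fp=1.0, cost_fn=9.0)
x = [1.0, 0.5]
x_adv = minimal_evasion(clf, x)
print(clf.predict(x), clf.predict(x_adv))  # 1 0
```

With `cost_fn=9` the threshold drops to 0.1, so pushing a positive input across the boundary takes a larger perturbation than it would under symmetric costs. This is one simple way in which error costs reshape the attacker's problem.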

Real-World Adversarial Threats: Led comprehensive research assessing the practical feasibility of adversarial attacks against AI systems. This included an empirical evaluation of physical adversarial patch attacks against object detection models for overhead imagery, which showed that attacks effective in the digital domain are difficult to carry over to real-world conditions. The work informed broader RAND reports on operational feasibility and defense considerations. Available on arXiv and as RAND reports: Operationally Relevant Artificial Training for Machine Learning; Operational Feasibility of Adversarial Attacks Against Artificial Intelligence.

Policy2Vec - NLP for Defense Policy: Well before the advent of LLMs, I developed natural language processing tools to analyze large collections of defense policy documents. I used web scraping to compile a corpus of roughly 9,000 official U.S. government policy documents, then applied Doc2Vec embeddings and hierarchical clustering to support semantic search and document-similarity analysis. This work demonstrated how modern NLP techniques can help policy researchers navigate vast bureaucratic document collections. Available on GitHub with technical documentation.
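A small, self-contained sketch of the search-and-cluster pipeline described above. To avoid third-party dependencies it substitutes sparse TF-IDF vectors for the Doc2Vec embeddings the project used, and it uses average-linkage agglomerative clustering as one concrete form of hierarchical clustering. The function names, stopword list, and four toy documents are hypothetical and are not taken from the Policy2Vec code.

```python
import math
from collections import Counter

# Hypothetical stopword list; a real pipeline would use a fuller one.
STOPWORDS = {"a", "an", "and", "for", "in", "of", "the", "to"}


def tokenize(text):
    """Lowercase, keep alphabetic tokens, drop stopwords."""
    return [t for t in text.lower().split() if t.isalpha() and t not in STOPWORDS]


def fit_idf(token_lists):
    """Smoothed inverse document frequency: log((1 + n) / (1 + df)) + 1."""
    n = len(token_lists)
    df = Counter(term for toks in token_lists for term in set(toks))
    return {term: math.log((1 + n) / (1 + d)) + 1 for term, d in df.items()}


def vectorize(tokens, idf):
    """Sparse TF-IDF vector as a dict; out-of-vocabulary terms are ignored."""
    tf = Counter(t for t in tokens if t in idf)
    return {t: c * idf[t] for t, c in tf.items()}


def cosine(u, v):
    dot = sum(w * v.get(t, 0.0) for t, w in u.items())
    nu = math.sqrt(sum(w * w for w in u.values()))
    nv = math.sqrt(sum(w * w for w in v.values()))
    return dot / (nu * nv) if nu and nv else 0.0


def search(query, vecs, idf, k=3):
    """Rank documents by cosine similarity to the query; returns (score, index)."""
    q = vectorize(tokenize(query), idf)
    scored = sorted(((cosine(q, v), i) for i, v in enumerate(vecs)), reverse=True)
    return scored[:k]


def average_linkage(vecs, n_clusters):
    """Agglomerative clustering: repeatedly merge the pair of clusters with the
    highest mean pairwise cosine similarity until n_clusters remain."""
    sim = [[cosine(a, b) for b in vecs] for a in vecs]
    clusters = [[i] for i in range(len(vecs))]
    while len(clusters) > n_clusters:
        best = None
        for i in range(len(clusters)):
            for j in range(i + 1, len(clusters)):
                pairs = [sim[a][b] for a in clusters[i] for b in clusters[j]]
                score = sum(pairs) / len(pairs)
                if best is None or score > best[0]:
                    best = (score, i, j)
        _, i, j = best
        clusters[i].extend(clusters[j])
        del clusters[j]  # j > i, so index i is unaffected
    return clusters


docs = [
    "cybersecurity policy for network defense",
    "network defense and cybersecurity guidance",
    "acquisition policy for major weapons programs",
    "weapons acquisition program management",
]
tokens = [tokenize(d) for d in docs]
idf = fit_idf(tokens)
vecs = [vectorize(t, idf) for t in tokens]
print(search("cybersecurity guidance", vecs, idf))  # document 1 ranks first
print(average_linkage(vecs, 2))  # [[0, 1], [2, 3]]
```

Replacing the TF-IDF vectors with document vectors from a trained Doc2Vec model would leave the search and clustering steps conceptually unchanged. The naive merge loop here is roughly cubic in the number of documents, which is fine for a toy but a corpus of thousands of documents would call for an optimized clustering library.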

AI Policy & Community Leadership

Beyond traditional research, I’ve worked to advance AI policy and safety through community building and direct engagement with leading AI organizations.

LLM Red-Teaming:

- OpenAI: Contributed to red-teaming efforts for GPT-4, with acknowledgment in the model’s technical documentation

- Meta: Conducted red-teaming assessments of Meta’s language models

RAND AI Study Circle:

- Founded and organized a company-wide AI seminar series that became a cornerstone of RAND’s AI research community

- Grew the series from an academic journal club into a major organizational initiative

- Received strong endorsement from RAND leadership and helped establish RAND’s expanded role in AI policy research